Some data I’ve found interesting…

I just spent the week with my older sister, and left reminded of how magnetic a personality she has.

We laugh at this, but she always says the trick to social success (and to managing social anxiety) is to have three recent stories prepared that you can weave into any conversation.

So, with that as inspiration, here are three pieces of data I’ve found interesting this week. They’re not quite stand-alone posts, but all three caught my eye and have stayed on my mind:

Circle’s growing market share in the stablecoin space

Open-source traction from the latest Y Combinator batch

How AI is starting to outperform humans in predicting real-world events

Circle's growing market share in the stablecoin space

This week, all of Crypto Twitter saw this Tweet from Token Terminal: “ICYMI: @solana is the biggest chain for $USDC, based on monthly USDC senders.”

But what’s more interesting to me is this:

USDC’s share of the stablecoin market, measured by monthly senders, is ~38%, up from ~20% a year ago.

Of course, Circle’s IPO gave the market a lot of confidence in USDC. More importantly, though, the jump shows that more users and institutions are moving towards Circle’s more regulated stablecoin and away from Tether, which has long been dominant.

Stablecoin senders (monthly) for Circle and other Stablecoin issuers

Tether: ~60.8%, Circle: ~38.8%

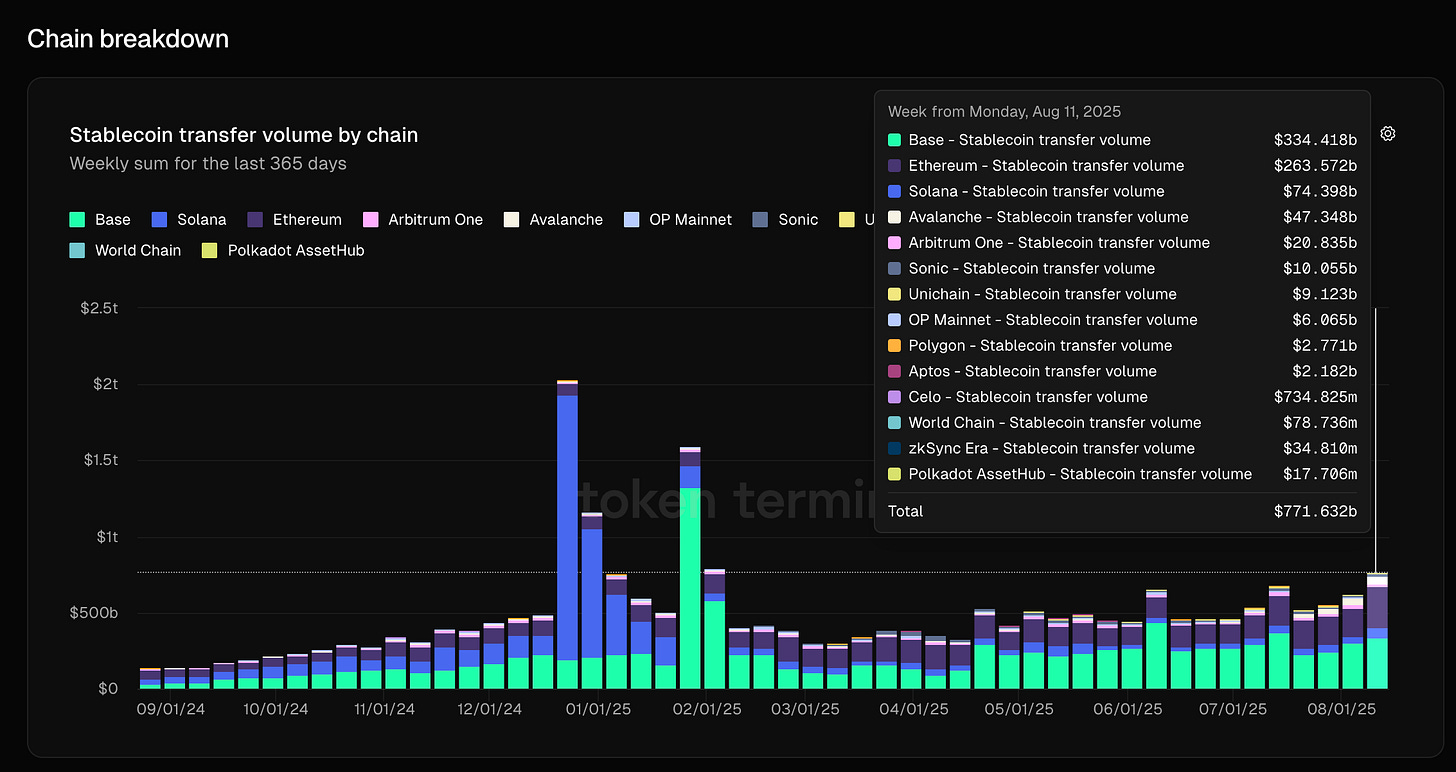

Circle stablecoin transfer volume by chain

Base: ~43.3%, Ethereum: ~34.2%, Solana: ~9.6%

Open-source traction from the latest YC batch

If you’ve read my previous posts, you’ll know that we track YC internally, and that I always find the data interesting since it provides a snapshot of what’s going on in the market (e.g. What's Working for YC Companies Since the AI Boom).

Last batch, we invested in Better Auth - a modern, developer-friendly authentication and authorization platform - whose GitHub metrics were off the charts.

Here are some of the top-starred open-source repos from this Summer batch:

Pangolin - fosrl - Stars: 13,852

Identity-Aware Tunneled Reverse Proxy Server with Dashboard UI

mcp-use - mcp-use - Stars: 6,721

mcp-use is the easiest way to interact with mcp servers with custom agents

stagewise - stagewise-io - Stars: 5,837 - check out the demo on the website, it’s very cool

stagewise is the first frontend coding agent for existing production-grade web apps 🪄 -- Lives inside your browser 💻 -- Makes changes in local codebase 🤓 -- Compatible with all kinds of frameworks and setups 💪

Hyprnote - fastrepl - Stars: 5,380

Local-first AI Notepad for Private Meetings

Magnitude - magnitudedev - Stars: 3,520

Open-source, vision-first browser agent

Cactus - cactus-compute - Stars: 2,850

Cross-platform framework for deploying LLM/VLM/TTS models locally on smartphones.

Epicenter - epicenter-so - Stars: 1,973

Press shortcut → speak → get text. Free and open source. More local-first apps soon ❤️

Omnara - omnara-ai - Stars: 1,702

Omnara (YC S25) - Talk to Your AI Agents from Anywhere!

nottelabs - nottelabs - Stars: 1,488

🔥 Reliable Browser AI agents (YC S25)

Kernel - onkernel - Stars: 475

Browsers-as-a-service for automations and web agents

Obviously, for developer tools and infra, open-source stars aren’t everything, but they are a fast proxy for developer interest and pull, which is worth noting. This batch shows continued momentum in agents, local-first, and dev-infra.

How AI is starting to outperform humans in predicting real-world events

You might’ve already seen this, but the University of Chicago launched a new open-evaluation platform called “Prophet Arena,” where AI models make live predictions (e.g. sports, politics, etc.) and get scored against real-money markets like Kalshi and Polymarket.

Announcement Tweet here: https://x.com/ProphetArena/status/1956928877106004430

The example that everyone is talking about is that in a recent Major League Soccer match, the market gave Toronto FC an 11% chance to win, while OpenAI’s o3-mini put the odds at 30% after “reading” news and team data.

Toronto won, and at the market’s implied 11% odds, that single bet returned ~9× the stake.
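To make the arithmetic concrete, here’s a minimal Python sketch of where the ~9× comes from. It assumes a simple binary contract with no fees or slippage, and the function names are my own for illustration, not anything from Prophet Arena, Kalshi, or Polymarket.

```python
def payout_multiple(market_prob: float) -> float:
    """Gross payout per $1 staked on a binary contract priced at market_prob.

    Assumption: a "yes" share costs market_prob dollars and pays $1 if it hits.
    """
    return 1.0 / market_prob


def expected_profit_per_dollar(model_prob: float, market_prob: float) -> float:
    """Expected net profit per $1 staked, IF the model's probability is correct."""
    return model_prob * payout_multiple(market_prob) - 1.0


market_prob = 0.11  # the market's implied chance of a Toronto FC win
model_prob = 0.30   # o3-mini's estimate, per the example above

print(f"payout multiple: {payout_multiple(market_prob):.2f}x")
print(f"expected profit per $1: {expected_profit_per_dollar(model_prob, market_prob):.2f}")
```

The first number is the payout if the bet wins. The second is what the bet looks like in expectation if you trust the model’s 30% over the market’s 11%, which is why a model that disagrees with the market can look so profitable when it turns out to be right.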

What’s even more interesting is the comparison of accuracy vs. average returns: GPT-5 was the most accurate overall, but o3-mini ended up being more profitable. It took bigger bets when it disagreed with the market - and that paid off. Data here.
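Here’s a toy Python sketch of how "most accurate" and "most profitable" can come apart. Everything in it is an assumption for illustration: the events and forecasts are made up, accuracy is measured with a Brier score (lower is better), and every bet is a flat $1 on whichever side the forecaster thinks is underpriced. That flat stake is a simplification of the bigger-bets-on-disagreement behavior described above, and none of this is Prophet Arena’s actual scoring code.

```python
from dataclasses import dataclass


@dataclass
class Event:
    market_prob: float  # market-implied probability of "yes"
    outcome: int        # 1 if "yes" happened, else 0


def brier_score(forecasts, events):
    """Mean squared error between forecast probabilities and outcomes."""
    return sum((p - e.outcome) ** 2 for p, e in zip(forecasts, events)) / len(events)


def flat_bet_profit(p, event, stake=1.0):
    """Net profit from staking on the side the forecaster thinks is underpriced."""
    if p > event.market_prob:      # forecaster thinks "yes" is too cheap
        price, won = event.market_prob, event.outcome == 1
    elif p < event.market_prob:    # forecaster thinks "no" is too cheap
        price, won = 1.0 - event.market_prob, event.outcome == 0
    else:                          # no disagreement, no bet
        return 0.0
    return stake * (1.0 / price - 1.0) if won else -stake


def average_return(forecasts, events):
    return sum(flat_bet_profit(p, e) for p, e in zip(forecasts, events)) / len(events)


# Hypothetical data: four favorites that behave as expected, plus one upset.
EVENTS = [Event(0.90, 1), Event(0.85, 1), Event(0.20, 0), Event(0.11, 1), Event(0.80, 1)]
CAUTIOUS = [0.95, 0.95, 0.05, 0.10, 0.90]    # sharp on the favorites, misses the upset
CONTRARIAN = [0.70, 0.65, 0.40, 0.30, 0.60]  # fuzzier overall, but leans into the upset

for name, forecasts in [("cautious", CAUTIOUS), ("contrarian", CONTRARIAN)]:
    print(name,
          "brier:", round(brier_score(forecasts, EVENTS), 4),
          "avg return per $1:", round(average_return(forecasts, EVENTS), 4))
```

In this made-up example the cautious forecaster has the better Brier score but loses money on average, while the contrarian one is less accurate and still comes out ahead, because a single win at long odds outweighs several small losses.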

Why this is awesome:

I love the distinction between being accurate and profitable. So GPT-5 might have been better on average at predicting outcomes correctly, but o3-mini was better at finding odds that were mispriced in the market.

When ChatGPT first launched, I met someone building an AutoGPT-style agent for deeper, chained reasoning. One of the breakout use cases on his Discord was sports betting predictions. It was an early sign that LLMs would be used for making money - especially in areas like trading and prediction - and only get better over time. This furthers that notion.

This is the core idea from Can AI Predict The Future: models aren’t just doing next-token prediction anymore. They’re getting better at forming a view of the world, and that’s a different level of capability.

It’s interesting to see LLMs get benchmarked outside coding and writing tasks. Related, I recently read Jennifer Shahade’s post on Kaggle’s chess AI tournament, where LLMs went head-to-head. o3 won, sweeping Grok in the final. The whole article is awesome and worth reading.

Outro

I’ll leave it at those three headlines for now. Thanks for reading!

I’m a General Partner at Chapter One, an early-stage venture fund that invests $500K - $2M checks into pre-seed and seed-stage startups.

If you’re a founder building a company, please feel free to reach out on Twitter (@seidtweets) or LinkedIn (https://www.linkedin.com/in/jamesin-seidel-5325b147/).
